Simulating Latency in ASP.NET Core

When we're doing web development on our local machines, we usually don't experience any network latency. Web pages open nearly instantaneously, provided we're not doing any long-running database or network operations. Because of that, the entire application tends to feel blazingly fast.

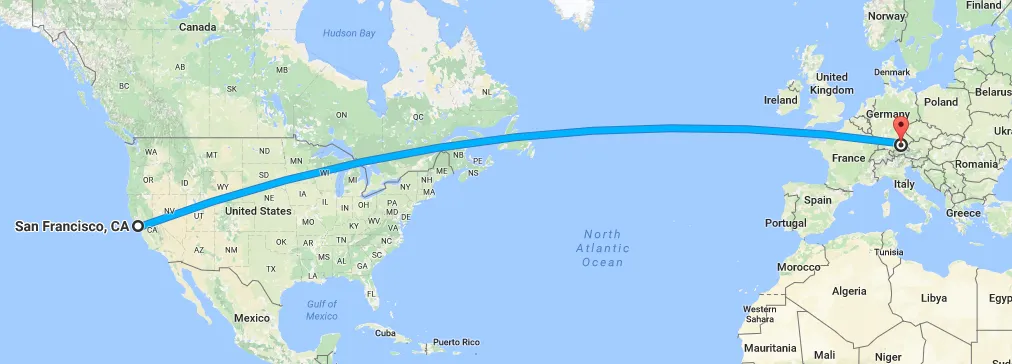

Of course, this responsiveness is in no way representative of the actual performance characteristics of the web application in production. Users remotely accessing the website encounter network latency with every HTTP request. Initial page loads take longer to complete, and so does every subsequent AJAX request. Generally speaking, the further away the server, the higher the latency.
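To put a rough number on that last point, here's a back-of-the-envelope sketch of the lowest round-trip time that distance alone allows. It assumes signals travel through optical fiber at roughly 200 km per millisecond (about two thirds of the speed of light). Real requests add routing, queuing, and handshakes on top, so treat the result as a floor rather than a prediction. `PropagationEstimate` and `MinRoundTripMs` are hypothetical names.

```csharp
using System;

// Back-of-the-envelope lower bound on round-trip time caused by distance alone.
// Assumption: light in optical fiber covers roughly 200 km per millisecond.
// Actual latency is higher (routing, queuing, TLS handshakes, server work).
public static class PropagationEstimate
{
    private const double FiberKmPerMs = 200.0;

    // Round trip: the request travels to the server and the response travels back.
    public static double MinRoundTripMs(double distanceKm)
    {
        if (distanceKm < 0)
        {
            throw new ArgumentOutOfRangeException(nameof(distanceKm), "Distance must be non-negative.");
        }

        return 2 * distanceKm / FiberKmPerMs;
    }
}
```

Under these assumptions, a server 6,000 km away can't answer in less than 60 ms (`MinRoundTripMs(6000)`), no matter how quickly it processes the request.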

This difference in performance characteristics got me thinking: Why not simulate network latency during local development? It's going to be there in production anyway, so we might as well experience it while developing to get a more realistic feel for how the application actually behaves.

Latency Middleware for ASP.NET Core

In ASP.NET Core, every request is processed by the HTTP request pipeline, which is composed of various pieces of middleware. To simulate network latency, we can register a piece of custom middleware at the very beginning of the pipeline that delays request processing. That way, every request is artificially slowed down, whether or not later middleware short-circuits it.
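The following toy model illustrates why the position in the pipeline matters. It is not ASP.NET Core's implementation: `Request`, `Handler`, and `ToyPipeline` are hypothetical stand-ins for `HttpContext`, `RequestDelegate`, and the application builder, kept free of framework dependencies so the ordering logic is easy to follow.

```csharp
using System;
using System.Collections.Generic;
using System.Threading.Tasks;

// Hypothetical stand-in for HttpContext: records which middleware ran.
public sealed class Request
{
    public List<string> Log { get; } = new List<string>();
}

// Hypothetical stand-in for RequestDelegate.
public delegate Task Handler(Request request);

public static class ToyPipeline
{
    // Wraps the terminal handler from the inside out, so the first
    // middleware in the list is the first one to see each request.
    public static Handler Build(IList<Func<Handler, Handler>> middleware, Handler terminal)
    {
        Handler app = terminal;
        for (int i = middleware.Count - 1; i >= 0; i--)
        {
            app = middleware[i](app);
        }
        return app;
    }

    // Latency middleware: waits, then always passes the request on.
    public static Func<Handler, Handler> Delay(TimeSpan delay) =>
        next => async request =>
        {
            request.Log.Add("delay");
            await Task.Delay(delay);
            await next(request);
        };

    // Short-circuiting middleware (think static files): answers the
    // request itself and never calls the next handler.
    public static Func<Handler, Handler> ShortCircuit() =>
        _ => request =>
        {
            request.Log.Add("short-circuit");
            return Task.CompletedTask;
        };
}
```

Building the pipeline with the delay first and a short-circuiting middleware second logs `delay,short-circuit`: the request is slowed down even though it never reaches the endpoint. Swap the two and only `short-circuit` is logged, so the delay is skipped entirely.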

Here's the beginning of the Configure method of the web application's Startup class:

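```csharp
public void Configure(IApplicationBuilder app, IHostingEnvironment env)
{
    // Only simulate latency locally; production traffic already has the real thing.
    // (In ASP.NET Core 3.0 and later, IWebHostEnvironment replaces the obsolete IHostingEnvironment.)
    if (env.IsDevelopment())
    {
        // Registered first, so every request is delayed before any other middleware runs.
        app.UseSimulatedLatency(
            min: TimeSpan.FromMilliseconds(100),
            max: TimeSpan.FromMilliseconds(300)
        );
    }

    // ...
}
```

Our latency middleware is added by the UseSimulatedLatency method, which we'll look at in a minute. We pass it a lower and an upper bound for a random delay, which in this case is between 100 ms and 300 ms long.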

Note that the latency is only simulated in development environments. After all, we don't want to slow down our web applications in production. Let's now look at the UseSimulatedLatency extension method:

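```csharp
using System;
using Microsoft.AspNetCore.Builder;

// Extension methods must live in a static class; the class name is illustrative.
public static class SimulatedLatencyExtensions
{
    public static IApplicationBuilder UseSimulatedLatency(
        this IApplicationBuilder app,
        TimeSpan min,
        TimeSpan max
    )
    {
        // UseMiddleware forwards min and max to the middleware's constructor,
        // alongside the RequestDelegate it supplies itself.
        return app.UseMiddleware(
            typeof(SimulatedLatencyMiddleware),
            min,
            max
        );
    }
}
```

Nothing interesting to see here. We specify which type of middleware to use and which arguments (in addition to the next RequestDelegate) to pass to its constructor. All the logic is implemented within the SimulatedLatencyMiddleware class: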

```csharp
using System;
using System.Threading;
using System.Threading.Tasks;
using Microsoft.AspNetCore.Http;

public class SimulatedLatencyMiddleware
{
    private readonly RequestDelegate _next;
    private readonly int _minDelayInMs;
    private readonly int _maxDelayInMs;
    private readonly ThreadLocal<Random> _random;

    public SimulatedLatencyMiddleware(
        RequestDelegate next,
        TimeSpan min,
        TimeSpan max
    )
    {
        // Reject invalid bounds when the pipeline is built rather than on every request.
        if (min < TimeSpan.Zero || max < min)
        {
            throw new ArgumentOutOfRangeException(nameof(max), "Expected 0 <= min <= max.");
        }

        _next = next;
        _minDelayInMs = (int)min.TotalMilliseconds;
        _maxDelayInMs = (int)max.TotalMilliseconds;

        // One Random instance per thread, since Random is not thread-safe.
        _random = new ThreadLocal<Random>(() => new Random());
    }

    public async Task Invoke(HttpContext context)
    {
        // Random.Next excludes its upper bound, so add 1 to make max reachable.
        int delayInMs = _random.Value.Next(
            _minDelayInMs,
            _maxDelayInMs + 1
        );

        // Hold the request for the simulated latency...
        await Task.Delay(delayInMs);

        // ...then hand it on to the rest of the pipeline.
        await _next(context);
    }
}
```

Because the Random class is not thread-safe, we wrap it within a ThreadLocal<T> so that every thread has its own instance. Within the Invoke method, a pseudo-random duration between min and max (inclusive) is calculated and passed to Task.Delay, which slows down the processing of that request. Finally, the next piece of middleware in the pipeline is executed.
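On .NET 6 and later, a possible alternative to the `ThreadLocal<Random>` wrapper is `Random.Shared`, a thread-safe instance provided by the runtime. The sketch below assumes that runtime version; `DelayPicker` is a hypothetical helper, and it also shows how a cancellation token could be passed to `Task.Delay`.

```csharp
using System;
using System.Threading;
using System.Threading.Tasks;

// Sketch for .NET 6 or later: Random.Shared is safe to use from any thread,
// so no per-thread Random instances are needed.
// DelayPicker is a hypothetical helper name, not a framework API.
public static class DelayPicker
{
    // Returns a whole number of milliseconds in the inclusive range [min, max].
    public static int NextDelayInMs(TimeSpan min, TimeSpan max)
    {
        if (min < TimeSpan.Zero || max < min)
        {
            throw new ArgumentOutOfRangeException(nameof(max), "Expected 0 <= min <= max.");
        }

        int minMs = (int)min.TotalMilliseconds;
        int maxMs = (int)max.TotalMilliseconds;

        // Random.Next excludes its upper bound, hence the + 1.
        return Random.Shared.Next(minMs, maxMs + 1);
    }

    // Waits for a random delay. The token lets the wait end early; inside the
    // middleware, context.RequestAborted would be the natural token to pass.
    public static Task DelayAsync(
        TimeSpan min,
        TimeSpan max,
        CancellationToken cancellationToken = default) =>
        Task.Delay(NextDelayInMs(min, max), cancellationToken);
}
```

If the middleware kept the original `TimeSpan` bounds in fields, `Invoke` could call `await DelayPicker.DelayAsync(min, max, context.RequestAborted);`. Keep in mind that a canceled `Task.Delay` completes with a `TaskCanceledException`, so the middleware would have to decide whether to catch it or let it propagate.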

It's a simple trick, really, but it might help you get a better sense of how your web application feels under realistic circumstances. Take a look at your monitoring tool of choice, figure out the average latency for an HTTP request in production, and adjust the min and max bounds for the random delays accordingly.
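As a starting point for picking the bounds, here's a small sketch that centers the delay window on an average latency taken from your monitoring data. `LatencyBounds.AroundAverage` and its symmetric `spread` parameter are assumptions of this example, not part of the middleware above.

```csharp
using System;

// Hypothetical helper: derive min/max bounds for simulated latency from an
// average production latency and an assumed symmetric spread around it.
public static class LatencyBounds
{
    public static (TimeSpan Min, TimeSpan Max) AroundAverage(TimeSpan average, TimeSpan spread)
    {
        if (average < TimeSpan.Zero || spread < TimeSpan.Zero)
        {
            throw new ArgumentOutOfRangeException(nameof(spread), "Values must be non-negative.");
        }

        // Clamp the lower bound at zero; a negative delay makes no sense.
        TimeSpan min = average - spread;
        if (min < TimeSpan.Zero)
        {
            min = TimeSpan.Zero;
        }

        TimeSpan max = average + spread;
        return (min, max);
    }
}
```

An average of 200 ms with a spread of 100 ms yields exactly the 100 ms to 300 ms window used in the `Configure` method, and the resulting pair can be passed straight to `UseSimulatedLatency`.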